One feature of a universe undergoing Flat Universal Granular Expansion is that the density of the universe is critical and that density is equal to the density of a Schwarzschild black hole with the radius equal to the age of the universe multiplied by the speed of light.

A hidden assumption there is that the black hole is 3D, but the universe has at least one more dimension, since time and space are interchangeable under general relativity (see On Time for an example of this interchangeability).

So … the question arises: what happens with a black hole in more than 3 dimensions?

There’s

some disagreement as to whether black holes are already 4D, because they exist

in a 4D universe (with three dimensions of space and one of time) and they

persist over time. While most say yes, of course, I have seen the

occasional person say no, of course not – basically because there is an inherent

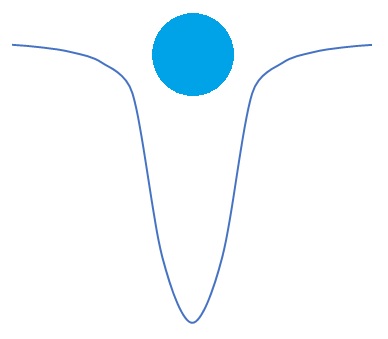

assumption that we only talk about spatial dimensions in relation to objects. We can’t imagine a . That’s not really the question I was

pondering though. I was thinking about

how mass would be distributed in a spatially 4D black hole, working from the

intuition that because there is more volume in the 3D surface of a 4D glome

than in a sphere, then there would need to be more mass.

This is going about things the wrong way though.

Consider a standard Schwarzschild black hole, a mass ($M_S$) that is neither charged nor rotating, in a volume defined by its radius ($r_S = 2GM/c^2$). This is the point at which the escape velocity is the speed of light, meaning that nothing, not even light, can escape.

We can show this easily: to escape, a body must have more kinetic energy than its gravitational potential energy, or

$$K + U(r) = \tfrac{1}{2}mv^2 - \frac{GMm}{r} > 0$$

So, at the limit where the kinetic energy is not quite enough,

$$\tfrac{1}{2}v^2 = \frac{GM}{r}$$

Since we are talking about where the limit is the speed of light at $r_S$, and rearranging, we get:

$$r_S = \frac{2GM}{c^2}$$
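To put a number on this, here is a minimal sketch of the calculation in Python. The constant values are rounded CODATA figures, and the one-solar-mass example (with an approximate solar mass) is my own illustrative choice rather than anything specific to the argument. The function names are hypothetical.

```python
# Physical constants in SI units (rounded CODATA values)
G = 6.67430e-11      # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0    # speed of light, m/s

# Approximate solar mass in kg; an illustrative assumption
SOLAR_MASS = 1.989e30


def schwarzschild_radius(mass_kg: float) -> float:
    """Radius at which the Newtonian escape speed equals c: r_S = 2GM/c^2."""
    return 2.0 * G * mass_kg / C**2


def newtonian_escape_speed(mass_kg: float, r_m: float) -> float:
    """Speed satisfying (1/2)v^2 = GM/r, that is v = sqrt(2GM/r)."""
    return (2.0 * G * mass_kg / r_m) ** 0.5


r_s = schwarzschild_radius(SOLAR_MASS)
ratio = newtonian_escape_speed(SOLAR_MASS, r_s) / C

print(f"r_S for one solar mass: {r_s:.0f} m")
print(f"escape speed at r_S, as a fraction of c: {ratio:.6f}")
```

For one solar mass the radius comes out at roughly 3 km, and plugging that radius back into the escape speed formula returns the speed of light, as the rearrangement requires.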

Note that we are effectively talking about vectors here: a body must have a velocity, not just a speed, in order to escape. In other words, the body must have a velocity greater than $v = c$ in the direction of the separation from the mass $M$. Every point on the sphere defined by the radius $r_S$ around the centre of mass $M_S$ defines a vector from the centre of the sphere. The sphere, however, is not really relevant to each body that is able or is not able to escape. All that matters is the vector between the centre of the mass and the body’s own centre of mass.

The same applies to a 4D black hole and also a hypothetical 2D black hole. The limit will always be given by $r_S = 2GM/c^2$, meaning that mass does not change. A 4D (along with a hypothetical 2D) black hole has the same mass as a 3D black hole.

---

Note that if we talk in terms of natural Planck units, the equation resolves to $r_S = 2M$, because both $G$ and $c$ are effectively just exchange rates between units, and it can be seen that the radius is two units of Planck length for every unit of Planck mass. This could have been intuited from the Mathematics for Imagining a Universe, where the conclusion that “mass … is being added to the universe at the rate of half a Planck mass … per Planck time” is reached, noting that in the scenario, that universe is expanding at the speed of light.
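The "two Planck lengths per Planck mass" claim can be checked numerically. The sketch below assumes the standard definitions of Planck length, $\sqrt{\hbar G/c^3}$, and Planck mass, $\sqrt{\hbar c/G}$, with rounded CODATA constants; the function name is hypothetical.

```python
import math

# Rounded CODATA values in SI units
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0       # speed of light, m/s
HBAR = 1.054571817e-34  # reduced Planck constant, J s

# Standard definitions of the Planck length and Planck mass
PLANCK_LENGTH = math.sqrt(HBAR * G / C**3)  # metres
PLANCK_MASS = math.sqrt(HBAR * C / G)       # kilograms


def radius_in_planck_lengths(mass_in_planck_masses: float) -> float:
    """Compute r_S = 2GM/c^2 in SI units, then express it in Planck lengths."""
    mass_kg = mass_in_planck_masses * PLANCK_MASS
    r_s_metres = 2.0 * G * mass_kg / C**2
    return r_s_metres / PLANCK_LENGTH


for m in (1.0, 5.0, 1e10):
    print(f"M = {m:g} Planck masses -> r_S = {radius_in_planck_lengths(m):g} Planck lengths")
```

Whatever mass is fed in, the ratio of radius (in Planck lengths) to mass (in Planck masses) stays at 2, up to floating-point rounding.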

---

Some may note that there is a problem. In On Time, I noted that the equation for kinetic energy is an approximation. It is noted elsewhere that $E_k = \tfrac{1}{2}mv^2$ is a first (or second) order approximation. As $v \to c$, the approximation becomes less valid, as $E_k = mc^2\left(1/\sqrt{1 - v^2/c^2} - 1\right)$ tends towards infinity. The derivation above explicitly uses $v = c$, so … it doesn’t work. (Other than the fact that, obviously, it does.)
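To see how quickly the approximation degrades, here is a small sketch comparing the two expressions for kinetic energy, both divided by $mc^2$ so that only $\beta = v/c$ matters. The function names and the sample speeds are my own choices for illustration.

```python
import math


def classical_ke_per_mc2(beta: float) -> float:
    """(1/2) m v^2 divided by m c^2, where beta = v/c."""
    return 0.5 * beta**2


def relativistic_ke_per_mc2(beta: float) -> float:
    """m c^2 (gamma - 1) divided by m c^2, valid for beta < 1."""
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    return gamma - 1.0


for beta in (0.01, 0.1, 0.5, 0.9, 0.99, 0.999999):
    classical = classical_ke_per_mc2(beta)
    relativistic = relativistic_ke_per_mc2(beta)
    print(f"v/c = {beta:<9} classical = {classical:.6g}  "
          f"relativistic = {relativistic:.6g}  "
          f"ratio = {relativistic / classical:.6g}")
```

At small speeds the ratio is indistinguishable from 1. As $v/c$ approaches 1 the classical value stays below one half while the relativistic value grows without bound, which is exactly the problem with putting $v = c$ into the classical formula.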

So, perhaps it’s better to come at it from the other side: from outside of the black hole, using the relativistic effects of gravitation.

Time is dilated and space is contracted (according to normal parlance) in proportion to the force of gravity. The relevant equation is this:

$$t_0 = t_f\sqrt{1 - \frac{2GM/c^2}{r}}$$

Note that $t_0$ is “proper time” between two events observed from a distance of $r$ from the centre of the mass $M$, and $t_f$ is the “coordinate time” between the same two events observed at a distance of $r_f$ such that the gravitational acceleration experienced, $a_f = GM/r_f^2$, is approximately zero (and assuming that no other effects are in play). This can be made even more complicated by considering that the events must be either collocated or equidistant from both observation locations, but we could just be a little less stringent, and say that $t_f$ is “normal time” and that $t_0$ is “affected time” (that is, affected by gravitation due to proximity to the mass $M$).

As $r$ decreases with increasing proximity to the mass $M$, affected time between standard events (in the normal frame) gets shorter until, when $1 - (2GM/c^2)/r = 0$, all normal events have no separation at all. This represents a limit, as you can’t get less than no separation (and what you get instead, if something were to approach more closely to the mass $M$, is the square root of a negative number).

The value of $r$ at this limit is, of course, $r = r_S = 2GM/c^2$. Note that while we have used the value $r$, we were not specifically talking about a radius, merely a separation. Therefore the same principles mentioned above apply.
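The behaviour of the time dilation factor near the limit can be sketched numerically. Writing the separation as a multiple of $r_S$ removes the need for any constants, since $(2GM/c^2)/r = r_S/r$. The function name and sample separations below are hypothetical choices for illustration.

```python
import math


def affected_over_normal(r_over_rs: float) -> float:
    """Ratio t_0 / t_f = sqrt(1 - r_S / r), for separations r >= r_S."""
    if r_over_rs < 1.0:
        # Closer than r_S, the term under the square root goes negative.
        raise ValueError("separation is less than r_S")
    return math.sqrt(1.0 - 1.0 / r_over_rs)


for multiple in (1000.0, 10.0, 2.0, 1.1, 1.01, 1.0):
    factor = affected_over_normal(multiple)
    print(f"r = {multiple:>7} r_S  ->  t_0 / t_f = {factor:.5f}")
```

Far from the mass the factor is close to 1, it shrinks as the separation decreases, and it reaches exactly zero at $r = r_S$. Any smaller separation raises an error here, mirroring the square root of a negative number.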

---

But, of course, that equation is predicated on “the Schwarzschild metric, which describes spacetime in the vicinity of a non-rotating massive spherically symmetric object”, so it already assumes $r_S = 2GM/c^2$.

---

So … we have to go deeper.

Looking at relativistic kinetic energy, we can see that it’s not quite so simple. At relativistic speeds, kinetic energy ($K$, to remain consistent with above) is given by:

$$K = \sqrt{p^2c^2 + m^2c^4} - mc^2$$

Where $p$ is the linear momentum, $p = m\gamma v$, and $\gamma = 1/\sqrt{1 - v^2/c^2}$, which approaches infinity as $v \to c$. Substituting and rearranging the above, then, we get:

$$K = \sqrt{(m\gamma v)^2c^2 + m^2c^4} - mc^2 = \sqrt{m^2\gamma^2v^2c^2 + m^2c^4} - mc^2$$

$$K = mc^2\left(\sqrt{\gamma^2v^2/c^2 + 1} - 1\right)$$

But since $\gamma = 1/\sqrt{1 - v^2/c^2}$, and just focussing on the term within the square root for the sake of clarity:

$$\frac{\gamma^2v^2}{c^2} + 1 = \frac{v^2/c^2}{1 - v^2/c^2} + 1 = \frac{v^2}{c^2 - v^2} + 1$$

$$= \frac{v^2}{c^2 - v^2} + \frac{c^2 - v^2}{c^2 - v^2} = \frac{c^2}{c^2 - v^2} = \frac{1}{1 - v^2/c^2}$$

And so:

$$K = mc^2\left(\sqrt{\frac{1}{1 - v^2/c^2}} - 1\right) = mc^2(\gamma - 1) = m\gamma c^2 - mc^2$$

Which is the same equation as shown in On Time (just the terminology is different), which puts us back to where we were before.
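As a sanity check on the algebra, the two forms of the kinetic energy can be compared numerically. This is only a spot check at a few speeds, not a proof, and it assumes units in which $c = 1$ by default. The function names are hypothetical.

```python
import math


def lorentz_gamma(v: float, c: float = 1.0) -> float:
    """gamma = 1 / sqrt(1 - v^2/c^2), valid for |v| < c."""
    return 1.0 / math.sqrt(1.0 - (v / c) ** 2)


def ke_from_momentum(m: float, v: float, c: float = 1.0) -> float:
    """K = sqrt(p^2 c^2 + m^2 c^4) - m c^2, with p = m * gamma * v."""
    p = m * lorentz_gamma(v, c) * v
    return math.sqrt((p * c) ** 2 + (m * c**2) ** 2) - m * c**2


def ke_from_gamma(m: float, v: float, c: float = 1.0) -> float:
    """K = m c^2 (gamma - 1)."""
    return m * c**2 * (lorentz_gamma(v, c) - 1.0)


for v in (0.1, 0.5, 0.9, 0.999):
    a = ke_from_momentum(1.0, v)
    b = ke_from_gamma(1.0, v)
    print(f"v/c = {v}: momentum form = {a:.12g}, gamma form = {b:.12g}")
```

The two columns agree to within floating-point rounding at every speed tried, which is what the rearrangement says should happen.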

---

So, while the intuitive solution has some procedural difficulties, we really need to go via the derivation of the Schwarzschild metric to see that $r_S$ is the radius at which the metric is singular (in this case meaning that there is a division by zero).

It is interesting that, in this case, using an approximation that is based on the assumption that $v \ll c$ works perfectly in a situation where $v = c$. Why that is the case is currently unclear to me.

---

Update: Having thought about it a little more and looking at some other views on why the factor of 2 is in the Schwarzschild radius, my suspicion is that the curvature of space in the region around a black hole is such that the escape velocity at any given distance from the black hole’s centre of mass (where $r \geq r_S$) is given by $v = \sqrt{2GM/r}$, even if that is the result you might reach simplistically (and by making incorrect assumptions about the value of kinetic energy at relativistic speeds).

Here's my thinking: the escape velocity at the Schwarzschild radius is, due to completely different factors, of a value such that the following equation is true: $\tfrac{1}{2}mv^2 = GMm/r$, where $m$ is irrelevant due to being on both sides of the equation, $M$ is the mass of a black hole, $v$ is the escape velocity (in this case $c$) and $r$ is the distance between the body in question and the centre of the mass $M$ (in this case the Schwarzschild radius). At a nominal distance $r$ very far from the centre of the mass $M$, the kinetic energy of a mass $m$ at escape velocity $v$ is indistinguishable from $\tfrac{1}{2}mv^2$ and is equal in value to the gravitational potential energy $GMm/r$, so the equation $\tfrac{1}{2}mv^2 = GMm/r$ is true (noting that, again, $m$ is irrelevant).

Since we have picked a nominal distance, we could conceivably pick another distance which is slightly closer to the centre of the black hole and have no reason to think that $\tfrac{1}{2}mv^2 = GMm/r$ is no longer true. As we inch closer and closer to the black hole, there is no point at which we should expect the equation to suddenly no longer hold, particularly since we know that it holds at the very distance from the black hole at which escape is simply no longer possible because we bump up against the universal speed limit of $c$.
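The "inching closer" picture can be tabulated. If the escape velocity really is $v = \sqrt{2GM/r}$ all the way in, then dividing by $c$ and using $r_S = 2GM/c^2$ gives $v/c = \sqrt{r_S/r}$, which needs no constants at all. The sketch below simply evaluates that expression under that assumption; the function name and the chosen distances are hypothetical.

```python
import math


def escape_speed_fraction(r_over_rs: float) -> float:
    """v/c = sqrt(r_S / r), from v = sqrt(2GM/r) and r_S = 2GM/c^2."""
    if r_over_rs < 1.0:
        raise ValueError("only distances r >= r_S are considered here")
    return math.sqrt(1.0 / r_over_rs)


for multiple in (10_000.0, 100.0, 4.0, 1.0):
    fraction = escape_speed_fraction(multiple)
    print(f"r = {multiple:>8.0f} r_S  ->  v/c = {fraction:.4f}")
```

The escape velocity rises smoothly from 1% of $c$ at ten thousand Schwarzschild radii, through 10% and 50%, to exactly $c$ at $r = r_S$, with no jump anywhere along the way.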

I accept that it’s not impossible that the escape velocity might gradually depart from the classical equation (becoming lower in magnitude due to the fact that we must consider relativity), only to pop back up to agreement with classical notions right at the last moment. I don’t have a theory to account for that, and have no intention to create one, but my not having a theory for something doesn’t make it untrue. It just seems unlikely. To me.
