On 5 September 2023, the climate scientist Patrick T Brown published an article in “The Free Press” which implied that he had perpetrated a Sokal-style hoax on the journal Nature. His explicit claim was that an “unspoken rule in writing a successful climate paper (is that the) authors should ignore—or at least downplay—practical actions that can counter the impact of climate change.”

Note the title of the article, “I Left Out the Full Truth to Get My Climate Change Paper Published”, and the subheading, “I just got published in Nature because I stuck to a narrative I knew the editors would like. That’s not the way science should work.” Note also that the link includes the word “overhype”, indicating that the editor had a different title in mind. This is another claim in itself, although it doesn’t really appear in the text.

The paper he co-authored was “Climate warming increases extreme daily wildfire growth risk in California”.

This all raises some key questions. Who is Patrick T Brown? Where does he hail from? And are his claims reasonable?

Patrick T Brown is, among other things, a co-director of the Climate and Energy Group at the Breakthrough Institute.

This institute, established by Michael Shellenberger and Ted Nordhaus, is focused on “ecomodernism”, which tends to favour using technology to solve problems: replacing fossil fuels with nuclear energy (not entirely bad), but resisting anything approaching efforts to minimise current reliance on fossil fuels. To be cynical, they appear to be in the “we don’t need to worry about climate change because we can fix it with our technology” camp of climate deniers.

If it were true that there was a real effort by academia to squash efforts to address climate change, this would indeed be a problem. We should do all the research: research into the impact of human activities on the climate (which we can more easily moderate), into the effects of climate change, and into ways of mitigating those effects. However, different journals address different aspects of science.

What does Nature publish?

According to their website:

The criteria for publication of scientific papers (Articles) in Nature are that they:

- report original scientific research (the main results and conclusions must not have been published or submitted elsewhere)

- are of outstanding scientific importance

- reach a conclusion of interest to an interdisciplinary readership.

Note that they don’t indicate that they publish articles on technological developments (which is where much of the detail on efforts to mitigate climate change would be expected to appear). However, there is a journal in the Nature stable precisely for that: the open access journal npj Climate Action.

So, the question is: did Patrick T Brown do any original scientific research into other contributions to climate change? He doesn’t say, so we don’t know.

Does Nature refuse to publish papers on natural contributions to climate change?

No. See, for example, “Contribution of natural decadal variability to global warming acceleration and hiatus” and “Indirect radiative forcing of climate change through ozone effects on the land-carbon sink”. Admittedly, these are old (about a decade), but there’s no indication that new original research into other factors has been rejected. There are also newer papers on the effect of methane released from thawing permafrost, such as this one from 2017: “Limited contribution of permafrost carbon to methane release from thawing peatlands”.

Did Nature give any indication that they didn’t want to publish a paper that talked about other drivers of climate change? No, in fact the opposite. His co-author, Steven J Davis, said (as reported at phys.org): “we don't know whether a different paper would have been rejected. … Keeping the focus narrow is often important to making a project or scientific analysis tractable, which is what I thought we did. I wouldn't call that 'leaving out truth' unless it was intended to mislead—certainly not my goal.”

Nature makes the peer review comments publicly available, and in those comments there are references to other factors “that play a confounding role in wildfire growth” and to the fact that “(t)he climate change scenario only includes temperature as input for the modified climate.”

Two of the reviewers recommended rejecting the paper, but neither of them did so on the basis that it mentioned factors other than anthropogenic climate change.

In the rebuttal to the reviewer comments, the authors wrote:

We agree that climatic variables other than temperature are important for projecting changes in wildfire risk. In addition to absolute atmospheric humidity, other important variables include changes in precipitation, wind patterns, vegetation, snowpack, ignitions, antecedent fire activity, etc. Not to mention factors like changes in human population distribution, fuel breaks, land use, ignition patterns, firefighting tactics, forest management strategies, and long-term buildup of fuels.

Accounting for changes in all of these variables and their potential interactions simultaneously is very difficult. This is precisely why we chose to use a methodology that addresses the much cleaner but more narrow question of what the influence of warming alone is on the risk of extreme daily wildfire growth.

We believe that studying the

influence of warming in isolation is valuable because temperature is the variable

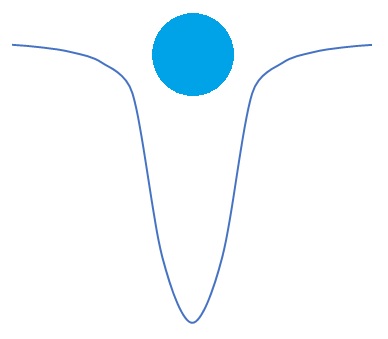

in the wildfire behavior triangle (Fig 1A) that is by far the most directly

related to increasing greenhouse gas concentrations and, thus, the most

well-constrained in future projections. There is no consensus on even the

expected direction of the change of many of the other relevant variables.

So the decision to make the study very narrow was, in their (or his) own words, made on the basis of ease and clarity, not to overcome publishing bias. Perhaps Patrick T Brown was lying. But there would be little point, since the paper’s authors write:

Our findings, however, must be interpreted narrowly as idealized calculations because temperature is only one of the dozens of important variables that influences wildfire behaviour.

So it is true that the study is narrow. Like much of science, it is all about trying to eliminate confounding factors and working out what the effect of one factor (or a limited number of factors) is. In this case, the authors have (with the assistance of machine learning) come to the staggering conclusion that if forests are warmer and drier, they burn more.

The main criticism that could be made is that Nature published a paper with such a mundane result.

However, the method, using machine learning, is potentially interesting. It could easily contribute to modelling, both by predicting the outcomes of various existing models and potentially by being redeployed to improve existing models (or to create new and better ones).

It’s a bizarre situation.

Why did Patrick T Brown, as a climate scientist, do this? Maybe he has been prevented from publishing something in the past.

Perhaps his new institute (or group) has been prevented from publishing something. That would be interesting to know.

Or is it something else?

Well, if you search hard enough, you can find that Patrick T Brown posted on Judith Curry’s blog back when he was a PhD student. And if you look at Judith Curry, you will find that she is what Michael Mann labelled a delayer: “delayers claim to accept the science, but downplay the seriousness of the threat or the need to act”.

Is it merely coincidence that the Breakthrough Institute, for which Patrick T Brown works, and his fellow ecomodernists are also the types who appear to accept the science but downplay the seriousness of the threat of climate change and the need to act, or at least criticise all current efforts to act?

---

My own little theory is that Patrick T Brown was not so much scoring an own goal in the climate science field as attempting deliberate sabotage.